Editor

Celine Low

OpenAI will be launching ChatGPT-5 soon, and it wants the chatbot to stop enabling users' unhealthy behaviours.

Earlier this year, the company reversed an update to GPT-4o that had made the bot overly agreeable. Users have shared worrying conversations with GPT-4o, including one in which it endorsed and gave instructions for terrorism, according to NBC News. The Verge also reported on multiple people who say their loved ones experienced mental health crises in which using the chatbot appeared to amplify their delusions.

I've stopped taking my medications, and I left my family because I know they made the radio signals come through the walls. https://t.co/u2XMIkaOx6 pic.twitter.com/M3fUPaSq2B

— AI Notkilleveryoneism Memes ⏸️ (@AISafetyMemes) April 28, 2025

New Tweaks To Help With Mental Health

“There have been instances where our 4o model fell short in recognising signs of delusion or emotional dependency,” OpenAI wrote in an announcement. “While rare, we’re continuing to improve our models and are developing tools to better detect signs of mental or emotional distress so ChatGPT can respond appropriately and point people to evidence-based resources when needed.”

OpenAI shared that it worked with more than 90 physicians across dozens of countries to craft custom rubrics for “evaluating complex, multi-turn conversations.” It’s also seeking feedback from researchers and clinicians who are helping to refine evaluation methods and stress-test safeguards for ChatGPT.

The company is also forming an advisory group made up of experts in mental health, youth development and human-computer interaction. More information will be released as the work progresses, OpenAI wrote.

This update is part of OpenAI's ongoing efforts to stop ChatGPT users from seeking out the chatbot for emotional validation and treating it as a therapist or friend. The company announced in April that it had revised its training techniques to "explicitly steer the model away from insincere compliments" or flattery.

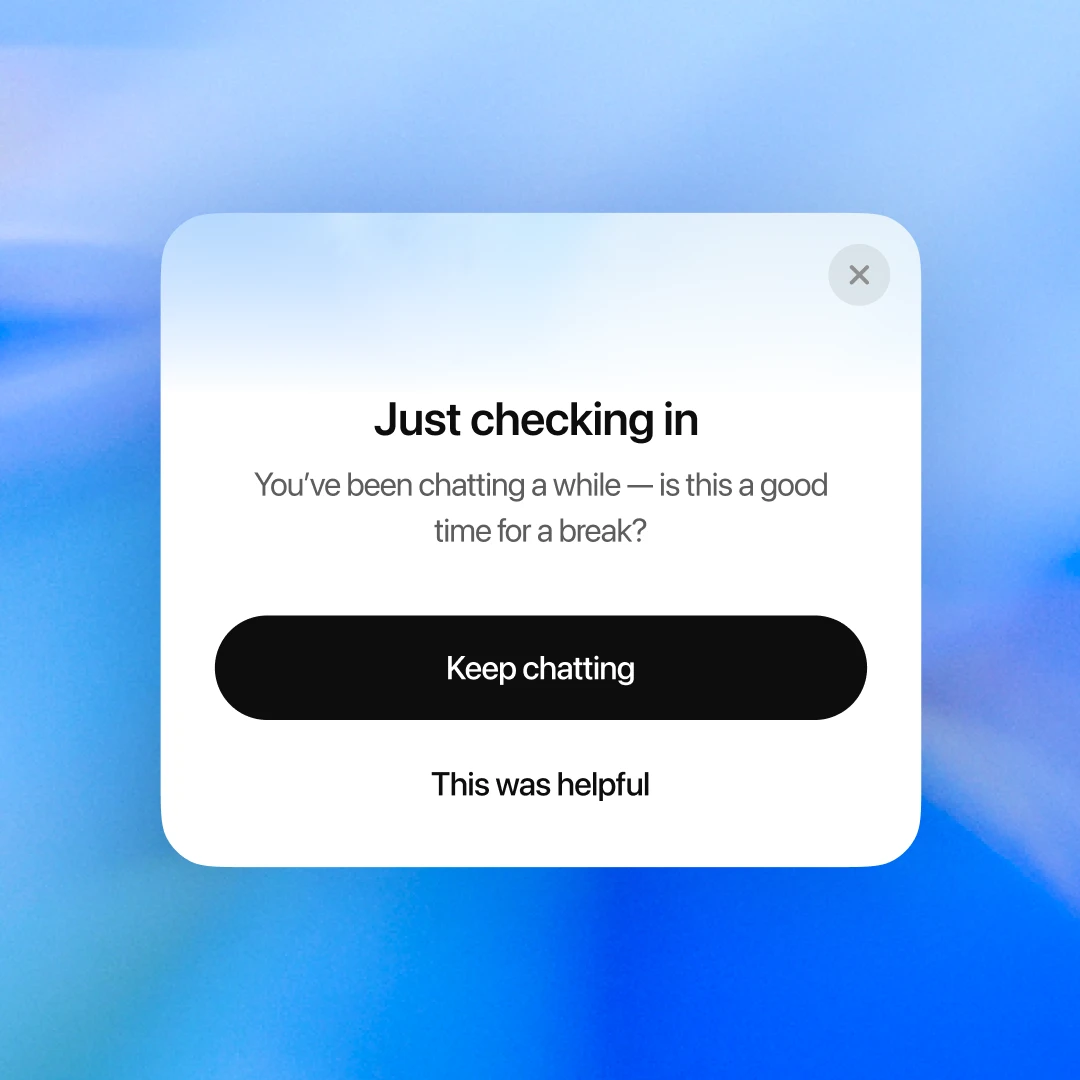

ChatGPT will now show users gentle reminders to take breaks during lengthy conversations. In another change, when you bring it a personal dilemma, ChatGPT will help you work through it rather than give a straight answer. It should help you think the problem through by asking questions and weighing pros and cons.

"New behaviour for high-stakes personal decisions is rolling out soon," the company said.

In a recent interview with podcaster Theo Von, OpenAI CEO Sam Altman expressed some concern over people using ChatGPT as a therapist or life coach.

He said that legal confidentiality protections between doctors and their patients, or between lawyers and their clients, don’t apply in the same way to chatbots.

“So if you go talk to ChatGPT about your most sensitive stuff, and then there’s a lawsuit or whatever, we could be required to produce that. And I think that’s very screwed up,” Altman said. “I think we should have the same concept of privacy for your conversations with AI that we do with a therapist or whatever. And no one had to think about that even a year ago.”
